Sorting algorithms come in many different shapes and sizes, and they all have different runtimes. Runtimes are the length an algorithm takes to complete, mostly depending on the size of the list, in which case we will call 'n'. For example, the run time of a simple sort such as bubble sort takes n^2 steps. Let's analyse why.

Let's say we want to sort the list [8,2,9,6,7,3,1] from smallest to largest. Bubble sort works by looking at the first element in the list, and comparing it to the next adjacent one. If it's smaller than the one next to it, it will swap them. This goes down the list, and we do this step the same amount of times as there are elements in the list. So we sift through the list n times because we check each element, and we do that, n times, one for each element in the list, so we have n * n steps, or n^2 steps. Let's sort this list to see why.

[8,2,9,6,7,3,1]

[8,2,9,6,7,3,1] We look at the 8 and 2, 2 is smaller than 8, so we swap them.

^ ^

[2,8,9,6,7,3,1] Then we look at 8 and 9, 8 is smaller than 9 so don't do anything.

^ ^

[2,8,9,6,7,3,1] Then we look at 9 and 6, where we swap them because 6 is smaller than 8.

^ ^

[2,8,6,9,7,3,1] Then we look at 9 and 7, where 9 is greater than 7 so we swap them.

^ ^

[2,8,6,7,9,3,1] Then we look at 9 and 3, where 9 is greater than 3 so we swap them.

^ ^

[2,8,6,7,3,9,1] Then finally we look at 9 and 1, where 9 is greater than 1 so we swap them.

^ ^

Notice we did this step 7, times because there are 7 elements in the list. But we then start again and perform those 7 checks 7 times, so 7*7 steps, 49 steps. This is how we can analyse the runtime of a sorting algorithm.

There are many different types of sorts, with different runtime lengths. There`s runtime lengths of nlog(n), like quick or heapsort, which for our purposes is the most efficient sorting algorithm when using a standard list or scenario.

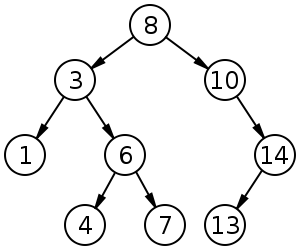

My personal experience with sorting and efficiency goes back to grade 11, and my introduction to computer science. We spent an entire unit simply discussing sorting algorithms and how to properly know which to use in certain situations as well as how to program them. A video we watched back then that we got to watch was Sorting out Sorting, a movie from 1981 which was created at University of Toronto. The video basically just goes over different types of sorts and how they execute but what I found the most interesting was during the credits, we get to see a time-lapse of a whole variety of sorts race against eachother in sorting these scattered dots. As we can see from the screenshot below, shellsort, quicksort and tree selection sort are already completed with heapsort close behind. These are because for an array of data with a large size, runtimes with logarithmic lengths will work wonders compared to ones with quadratic runtimes.

|

| https://www.youtube.com/watch?v=SJwEwA5gOkM |